How Satellites Use Game Theory to Fly in Perfect Formation — Simulated in MATLAB

Introduction & Motivation

Why Do Satellites Need to Play Games in Space?

Modelling the Satellites

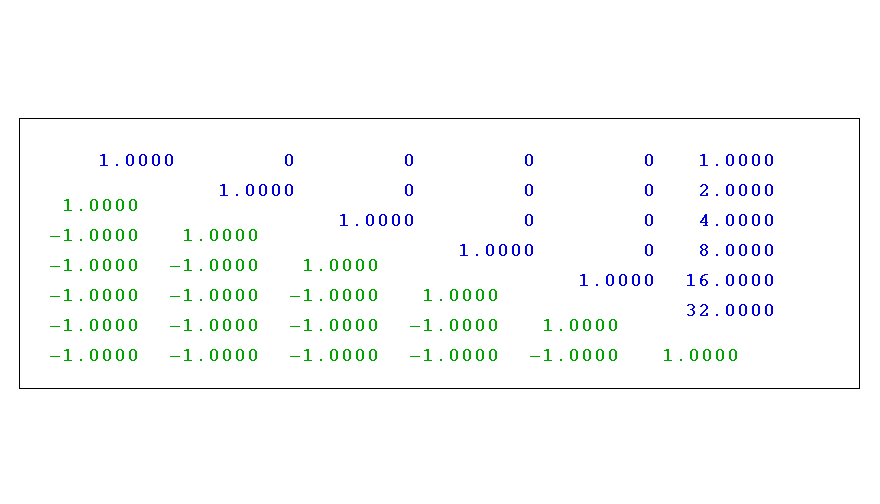

In MATLAB, we construct this using blkdiag for the overall A matrix and control input matrices B1 ,B2 ,B3.

What are they optimizing?

- Stay close to the desired trajectory,

- Use as little thrust as possible (to save fuel).

Solving the differential game in MATLAB

Where P1,P2,P3are the matrices we are solving for and$ S_i = B_iR_i^{-1}B_i^\top $. These equations are coupled and nonlinear, so we can’t solve them directly in one step. Instead, we’ll use MATLAB to solve them numerically. Here I will explain two methods we can use but other options can be available.

Method 1) Iterative Best-Response Dynamics

The idea of the algorithm can be summarized as:

- Initialize \(P_1\), \(P_2\), and \(P_3\).

- For each \(i \in \{1, 2, 3\}\), solve:\(P_i = \mathrm{icare}\bigl(A – \sum_{j \neq i} S_j P_j, B_i, Q_i, R_i\bigr)\)

- Repeat until convergence:\(\|P_i^{(i)} – P_i^{(i-1)}\| \leq \epsilon\)

We initialize each Pi with a common matrix P, often obtained from a centralized LQR solution or using icare. This gives us a feasible and symmetric starting point.

- At each iteration, each satellite solves a modified algebraic Riccati equation (CARE).

- The guessed matrices (P1, P2, P3) are adjusted.

- This is essentially a best-response update: each player assumes others are fixed and computes its own optimal response.

- The loop continues until all three matrices stop changing significantly ( the while condition is not satisfied).

Method 2) Direct Nonlinear System Solver

The second approach treats the Riccati system as a single block of nonlinear equations. We parameterize each symmetric matrix using its upper-triangular entries and define a residual function over all unknowns. This results in a system of 3n(n+1)/2equations and we then solve

F(P1, P2, P3) = 0

- Encodes each as a vector of unique upper-triangular elements,

- Constructs the residuals of the Riccati equations,

- Uses fsolve to drive the residual norm toward zero,

- Verifies solution symmetry and positive-definiteness.

- fsolve attempts to find the roots of the residual equations (i.e., where they evaluate to zero).

- We use a trust-region algorithm, suitable for large systems.

- A tight tolerance ensures high-accuracy convergence for sensitive Riccati solutions.

Going into more details on the function solveRiccatiFsolve note that we take as input the system matrices A, cost matrices Q1,Q2 and Q3, and weighting matrices S1,S2 and S3. We also accept optional settings for the optimization routine. Inside the body of the function significant parts of the code are:

The choice of initial guess is critical for convergence. The initial guess is chosen in the same way as in Method 1). Note that we only extract the upper triangular elements (since the matrices are symmetric) and flatten them into a single vector x0 that fsolve will work with. This vectorization approach is key to using MATLAB’s optimization tools effectively. Moving on:

- Convert it back to matrix form

- Evaluate the left-hand side of each equation

- Since the right-hand side is zero, these values are our residuals

- We flatten only the upper triangular parts of these residual matrices into a vector

Simulation Results:

With the feedback matrices P1, P2 and P3 computed, we can now simulate how our satellites behave in closed-loop formation. This is where the theory meets reality.

We plug the optimal strategies back into the system. The control law for each satellite is:

ui⋆ = -Ri−1 Bi⊤ Pi x

This leads to a closed-loop system of the form:

ẋ = (A − ∑i=13 Si Pi) x

Note that in this specific scenario both methods gave the same solutions for P1 ,P2,P3.

Simulations

What the Results Show

- Category:

- MATLAB

Comments

To leave a comment, please click here to sign in to your MathWorks Account or create a new one.