Deep Learning and IoT Workshop at GHC 18, Grace Hopper Celebration of Women in Computing

Dear friends, three of us put together this blog post on behalf of the MathWorks GHC team: Louvere Walker-Hannon, an application engineer who helps customers with deep learning and data analytics; Shruti Karulkar, a quality engineering lead for test and measurement; and Anoush Najarian, a performance engineer.

Our team had an awesome time at GHC 18, the Grace Hopper Celebration of Women in Computing. Going to the conference helped our team members get to know each other and brought out superpowers we didn’t know we had!

This was our first year as a sponsor of the conference. The Grace Hopper Celebration is the world’s largest gathering of women technologists. Besides recruiting and attending key technology talks, our team delivered a hands-on MATLAB workshop on Deep Learning and IoT.

The workshop

We were thrilled to have a hands-on workshop proposal accepted at GHC, an honor and a responsibility. It turned out that we would be running two large sessions, both of which filled up during preregistration.

We asked everyone to bring a laptop with a webcam or to share one. Participants ran deep learning code in their browser using MATLAB Online, which runs MATLAB on cloud instances, sent their inference results to ThingSpeak, an open IoT (Internet of Things) platform, and then analyzed the aggregated data.

Fruit caper

Everyone captured images of real-world objects using webcams and used a Deep Learning network to classify them. To make things more fun, we used fruit for inference: Granny Smith apples, oranges, lemons and bananas. Our team went on a “fruitcase” expedition: we visited a local grocery store with a suitcase, bought a bunch of fruit for the workshop, and at the end of the day, gave it away to the many amazing GHC workers.

Exercises

Our workshop had three exercises and two take-home problems.

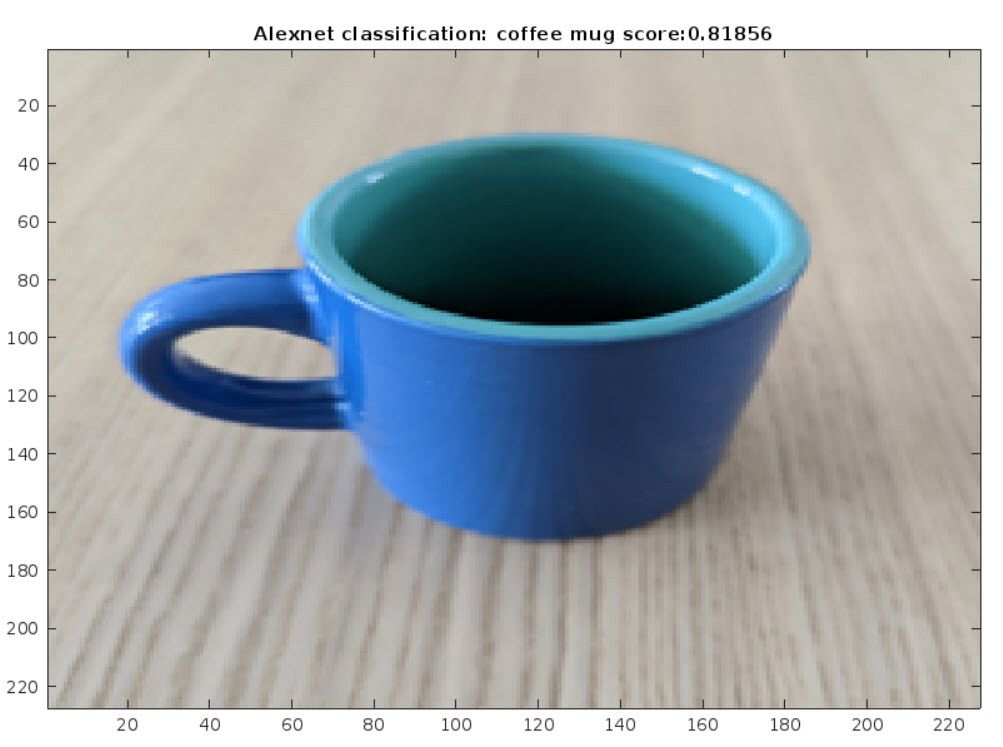

In our first exercise, we used a webcam to capture an image, and passed it along to the AlexNet Deep Learning network for inference, generating a classification label and a confidence score.

    % This is code for Exercise 1 as part of the Hands on with Deep Learning
    % and IoT workshop presented at the Grace Hopper Celebration 2018-09-27

    %% Connecting to the camera
    camera = webcam(1); % Connect to the camera

    %% Loading the neural net named: Alexnet
    nnet = alexnet; % Load the neural net

    %% Capturing and classifying image data
    picture = snapshot(camera); % Take a picture
    picture = imresize(picture, [227, 227]); % Resize the picture
    [label, scores] = classify(nnet, picture); % Classify the picture and obtain confidence scores
    [sorted_scores, ~] = sort(scores, 'descend'); % Sort scores in descending order
    image(picture); % Show the picture
    title(['Alexnet classification: ', char(label), ' score:', ...
        num2str(sorted_scores(1))]); % Show the label
    clear camera
    drawnow;
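
The label that classify returns is the class with the highest score, and sorting the scores also reveals the runner-up guesses. The sketch below illustrates that relationship on made-up scores for four fruit classes; the class names and numbers are hypothetical, not real AlexNet output (which covers 1,000 classes). In the workshop code, the class names would come from the network itself, for example nnet.Layers(end).Classes, assuming the scores are in the same order as those classes.

```matlab
% Hypothetical class names and made-up scores (NOT real AlexNet output)
classNames = {'Granny Smith', 'orange', 'lemon', 'banana'};
scores = [0.62, 0.05, 0.21, 0.12];   % One score per class; they sum to 1

% The predicted label is the class with the highest score
[sortedScores, order] = sort(scores, 'descend');
topLabel = classNames{order(1)};
topScore = sortedScores(1);

% The next-best guesses are often informative too
k = 3;
topK = classNames(order(1:k));
fprintf('Top-%d: %s\n', k, strjoin(topK, ', '));
```

Printing the top few guesses can make surprising classifications easier to interpret, since a low top score often means the runner-up labels were close.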

In our second exercise, we repeated Exercise 1 and then posted the inference data to an IoT channel. Note that everyone posted to the same channel, so it aggregated all participants’ data.

    % This is code for Exercise 2 as part of the Hands on with Deep Learning
    % and IoT workshop presented at the Grace Hopper Celebration 2018-09-27

    %% Connecting to the camera
    camera = webcam(1); % Connect to the camera

    %% Loading the neural net named: Alexnet
    nnet = alexnet; % Load the neural net

    %% Capturing and classifying image data
    picture = snapshot(camera); % Take a picture
    picture = imresize(picture, [227, 227]); % Resize the picture
    [label, scores] = classify(nnet, picture); % Classify the picture and obtain confidence scores
    [sorted_scores, ~] = sort(scores, 'descend'); % Sort scores in descending order
    image(picture); % Show the picture
    title(['Alexnet classification: ', char(label), ' score:', ...
        num2str(sorted_scores(1))]); % Show the label
    clear camera
    drawnow;

    %% Aggregating label data to open IoT platform
    % 123456789 and 'XXXYYYZZZ' are placeholders for the shared channel's ID
    % and write API key. If the write fails (for example, when many
    % participants post at once), pause for a random 1 to 5 seconds
    % instead of stopping the script with an error.
    try
        thingSpeakWrite(123456789, string(label), 'WriteKey', 'XXXYYYZZZ')
    catch
        pause(randi(5))
    end

In our third exercise, we grabbed the aggregated inference data from the IoT channel and visualized it. It was fun and a bit surprising to see what everyone’s objects ended up getting classified as.

    % This is code for Exercise 3 as part of the Hands on with Deep Learning
    % and IoT workshop presented at the Grace Hopper Celebration 2018-09-27

    %% Reading aggregated label data for the last 2 hours (120 minutes) from ThingSpeak
    readChannelID = 570969;
    LabelFieldID = 1;
    readAPIKey = ''; % Empty read key: the channel is assumed to be public
    dataForLastHours = thingSpeakRead(readChannelID, ...
        'Fields', LabelFieldID, 'NumMinutes', 120, ...
        'ReadKey', readAPIKey, 'OutputFormat', 'table');

    %% Visualizing data using a histogram
    if (not(isempty(dataForLastHours)))
        labelsForLastHours = categorical(dataForLastHours.Label);
        numbins = min(numel(unique(labelsForLastHours)), 20); % Show at most 20 bins
        histogram(labelsForLastHours, 'DisplayOrder', 'descend', ...
            'NumDisplayBins', numbins);
        xlabel('Objects Detected');
        ylabel('Number of times detected');
        title('Histogram: Objects Detected by Deep Learning Network');
        set(gca, 'FontSize', 10)
    end
    drawnow

When our participants ran this code, we saw a histogram aggregating everyone’s inference data, with all the objects detected during the workshop. This is the power of IoT! Check out the data from all our workshop sessions aggregated on the ThingSpeak channel.
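
Under the hood, the histogram is a count of how many times each label appears, sorted from most to least frequent. A minimal sketch of that counting step, using a hypothetical list of labels in place of the table read from ThingSpeak, and base MATLAB functions (unique, accumarray, sort) rather than categorical and histogram:

```matlab
% Hypothetical labels standing in for dataForLastHours.Label
labels = {'banana', 'lemon', 'banana', 'Granny Smith', 'banana', 'lemon'};

% Map each label to an integer id, then count how often each id occurs
[uniqueLabels, ~, id] = unique(labels);
counts = accumarray(id(:), 1);

% Most frequent first, mirroring 'DisplayOrder', 'descend'
[counts, order] = sort(counts, 'descend');
uniqueLabels = uniqueLabels(order);

% Keep at most 20 bins, mirroring the 'NumDisplayBins' cap in Exercise 3
numbins = min(numel(uniqueLabels), 20);
for i = 1:numbins
    fprintf('%-14s %d\n', uniqueLabels{i}, counts(i));
end
```

Seeing the raw counts this way can help when checking whether a surprising bar in the histogram comes from many participants or just one.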

As take-home exercises, we challenged participants to use GoogLeNet instead of AlexNet, and to create their own IoT channel and use it to post and analyze data.
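
For the first take-home problem, the main change is the input size: GoogLeNet expects 224-by-224 images, while AlexNet expects 227-by-227. The sketch below assumes the Deep Learning Toolbox and its GoogLeNet support package are installed. It reads the input size from the network’s first layer instead of hard-coding it, and it uses the peppers.png sample image that ships with MATLAB in place of a webcam snapshot so that it can run without a camera.

```matlab
% Take-home sketch: Exercise 1 with GoogLeNet instead of AlexNet.
% Assumes Deep Learning Toolbox and the GoogLeNet support package.
nnet = googlenet; % Load the pretrained network

% Read the expected input size from the network instead of hard-coding it
inputSize = nnet.Layers(1).InputSize(1:2);

% Sample image standing in for the webcam; replace with snapshot(camera)
% to reuse the Exercise 1 workflow
picture = imread('peppers.png');
picture = imresize(picture, inputSize);

[label, scores] = classify(nnet, picture); % Label plus per-class scores
image(picture); % Show the picture
title(['GoogLeNet classification: ', char(label), ...
    ' score: ', num2str(max(scores))]);
drawnow;
```

For the second take-home problem, the thingSpeakWrite and thingSpeakRead calls from Exercises 2 and 3 carry over unchanged; only the channel ID and API keys change to those of the new channel.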

Feedback

It’s an honor to have a speaking proposal accepted at GHC, and delivering large hands-on sessions is a big responsibility.

We loved hearing from our participants on social media.

We heard from professors and AI and Deep Learning enthusiasts who are interested in using our materials on campuses and at maker events: below are the first two, and a few others are in the works! If you’d like to give our Deep Learning and IoT demo a shot, let us know in the comments.

Hope Rubin of our GHC team led STEM Ambassadors who brought this Deep Learning and IoT demo to the Boston Mini-Maker Faire.

Under the leadership of brave Professor Sonya Hsu and her ACM-W partners, a team ran the workshop during the Science Day at the University of Louisiana at Lafayette. Look for posts on these events on this blog!

Thank you

We couldn’t have done this without our team members’ extensive experience with teaching and tech, the awesome guidance by our senior leaders, and the help from hundreds of MathWorkers and Boston SWE friends.

Boston SWE (Society of Women Engineers), one of the oldest and largest SWE sections in the country, has been our rock! We ran our workshop at a SWE event at MathWorks the week before GHC, putting our code and materials in front of many inquisitive, engaged participants who gave us their time and words of encouragement, and who asked us tough questions!

Next steps

You too are welcome to use our GHC 18 Deep Learning and IoT workshop materials!

Want to learn more? Take the free Deep Learning Onramp! Learn about and build IoT projects.

Visit our GHC page to meet our team and learn about working at MathWorks. Take a look at the photos our team took or received from session chairs, and at our Twitter Moment. While you’re at it, check out the work of awesome women we have been highlighting with the #shelovesmatlab hashtag! Share your thoughts in the comments.
