LINPACK, Linear Equation Package

The ACM Special Interest Group on Programming Languages, SIGPLAN, expects to hold the fourth in a series of conferences on the History of Programming Languages in 2020, see HOPL-IV. The first drafts of papers are to be submitted by August, 2018. That long lead time gives me the opportunity to write a detailed history of MATLAB. I plan to write the paper in sections, which I'll post in this blog as they are available. This is the third such installment.

LINPACK and EISPACK are Fortran subroutine packages for matrix computation developed in the 1970's. They are a follow-up to the Algol 60 procedures that I described in my blog post about the Wilkinson and Reinsch Handbook. They led to the first version of MATLAB. This post is about LINPACK. My previous post was about EISPACK.

Contents

Cart Before the Horse

Logically a package of subroutines for solving linear equations should come before a package for computing matrix eigenvalues. The tasks addressed and the algorithms involved are mathematically simpler. But chronologically EISPACK came before LINPACK. Actually, the first section of the Handbook is about linear equations, but we pretty much skipped over that section when we did EISPACK. The eigenvalue algorithms were new and we felt it was important to make Fortran translations widely available.

LINPACK Project

In 1975, as EISPACK was nearing completion, we proposed to the NSF another research project investigating methods for the development of mathematical software. A byproduct would be the software itself.

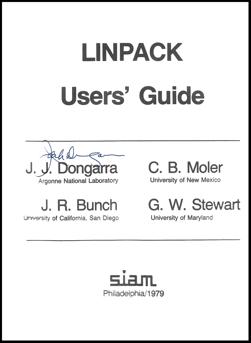

The four principal investigators, and eventual authors of the Users' Guide, were

- Jack Dongarra, Argonne National Laboratory

- Cleve Moler, University of New Mexico

- James Bunch, University of California, San Diego

- G. W. (Pete) Stewart, University of Maryland

The project was centered at Argonne. The three of us at universities worked at our home institutions during the academic year and we all got together at Argonne in the summer. Dongarra and I had previously worked on EISPACK.

Contents

Fortran subroutine names at the time were limited to six characters. We adopted a naming convention with five letter names of the form TXXYY. The first letter, T, indicates the matrix data type. Standard Fortran provides three such types and, although not standard, many systems provide a fourth type, double precision complex, COMPLEX*16. Unlike EISPACK which follows Algol and has separate arrays for the real and imaginary parts of a complex matrix, LINPACK takes full advantage of Fortran complex. The first letter can be

S Real D Double precision C Complex Z Complex*16

The next two letters, XX, indicate the form of the matrix or its decomposition. (A packed array is the upper half of a symmetric matrix stored with one linear subscript.)

GE General GB General band PO Positive definite PP Positive definite packed PB Positive definite band SI Symmetric indefinite SP Symmetric indefinite packed HI Hermitian indefinite HP Hermitian indefinite packed TR Triangular GT General tridiagonal PT Positive definite tridiagonal CH Cholesky decomposition QR Orthogonal triangular decomposition SV Singular value decomposition

The final two letters, YY, indicate the computation to be done.

FA Factor SL Solve CO Factor and estimate condition DI Determinant, inverse, inertia DC Decompose UD Update DD Downdate EX Exchange

This chart shows all 44 of the LINPACK subroutines. An initial S may be replaced by any of D, C, or Z. An initial C may be replaced by Z. For example, DGEFA factors a double precision matrix. This is probably the most important routine in the package.

CO FA SL DI SGE * * * * SGB * * * * SPO * * * * SPP * * * * SPB * * * * SSI * * * * SSP * * * * CHI * * * * CHP * * * * STR * * * SGT * SPT *

DC SL UD DD EX SCH * * * * SQR * * SSV *

Following this naming convention the subroutine for computing the SVD, the singular value decomposition, fortuitously becomes SSVDC.

Programming Style

Fortran at the time was notorious for unstructured, unreadable, "spaghetti" code. We adopted a disciplined programming style and expected people as well as machines to read the codes. The scope of loops and if-then-else constructions were carefully shown by indenting. Go-to's and statement numbers were used only to exit blocks and properly handle possibly empty loops.

In fact, we, the authors, did not actually write the final programs, TAMPR did. TAMPR was a powerful software analysis tool developed by Jim Boyle and Ken Dritz at Argonne. It manipulates and formats Fortran programs to clarify their structure. It also generates the four data type variants of the programs. So our source code was the Z versions and the S, D, and C versions, as well as "clean" Z versions, were producing by TAMPR.

This wasn't just a text processing task. For example, in ZPOFA, the Cholesky factorization of a positive definite complex Hermitian matrix, the test for a real, positive diagonal is

if (s .le. 0.0d0 .or. dimag(a(j,j)) .ne. 0.0d0) go to 40

In the real version DPOFA there is no imaginary part, so this becomes simply

if (s .le. 0.0d0) go to 40

BLAS

All of the inner loops are done by calls to the BLAS, the Basic Linear Algebra Subprograms, developed concurrently by Chuck Lawson and colleagues. On systems that did not have optimizing Fortran compilers, the BLAS could be implemented efficiently in machine language. On vector machines, like the CRAY-1, the loop is a single vector instruction.

The two most important BLAS are the inner product of two vectors, DDOT, and vector plus scalar times vector, DAXPY. All of the algorithms are column oriented to conform to Fortran storage of two-dimensional arrays and thereby provide locality of memory access.

Portability

The programs avoid all machine dependent quantities. There is no need for anything like the EISPACK parameter MACHEP. Only one of the algorithms, the SVD, is iterative and involves a convergence test. Convergence is detected as a special case of a negligible subdiagonal element. Here is the code for checking if the subdiagonal element e(l) is negligible compared to the two nearby diagonal elements s(l) and s(l+1). I have included enough of the code to show how TAMPR displays structure by indentation and loop exits by comments with an initial c.

do 390 ll = 1, m

l = m - ll

c ...exit

if (l .eq. 0) go to 400

test = dabs(s(l)) + dabs(s(l+1))

ztest = test + dabs(e(l))

if (ztest .ne. test) go to 380

e(l) = 0.0d0

c ......exit

go to 400

380 continue

390 continue

400 continuePublisher

No commercial book publisher would publish the LINPACK Users' Guide. It did not fit into their established categories; it was neither a textbook nor a research monograph and was neither mathematics nor computer science.

Only one nonprofit publisher, SIAM, was interested. I vividly remember sitting on a dock in Lake Mendota at the University of Wisconsin-Madison during a SIAM annual meeting, talking to SIAM's Executive Director Ed Block, and deciding that SIAM would take a chance. The Guide has turned out to be one of SIAM's all-time best sellers.

A few years later, in 1981, Beresford Parlett reviewed the publication in SIAM Review. He wrote

It may seem strange that SIAM should publish and review a users' guide for a set of Fortran programs. Yet history will show that SIAM is thereby helping to foster a new aspect of technology which currently rejoices in a name mathematical software. There is as yet no satisfying characterization of this activity, but it certainly concerns the strong effect that a computer system has on the efficiency of a program.

LINPACK Benchmark

Today, LINPACK is better known as a benchmark than as a subroutine library. I wrote a blog post about the LINPACK Benchmark in 2013.

In Retrospect

In a sense, the LINPACK and EISPACK projects were failures. We had proposed research projects to the NSF to "explore the methodology, costs, and resources required to produce, test, and disseminate high-quality mathematical software." We never wrote a report or paper addressing those objectives. We only produced software

References

J. Dongarra and G. W. Stewart, "LINPACK, A Package for Solving Linear Systems", chapter 2 in W. Cowell, 29 pages, Sources and Development of Mathematical Software, Prentice Hall, 1984, PDF available.

J. J. Dongarra, J. R.Bunch, C. B. Moler and G. W. Stewart, LINPACK User's Guide, SIAM, 1979, 344 pages, LINPACK User's Guide.

C. Lawson, R. Hanson, D. Kincaid and F. Krogh, "Basic Linear Algebra Subprograms for Fortran Usage, ACM Trans. Math. Software vol. 5, 308-371, 1979. Reprint available.

J. Boyle and K. Dritz, "An automated programming system to aid the development of quality mathematical software," IFIP Proceedings, North Holland, 1974.

- Category:

- History,

- Matrices,

- Numerical Analysis

Cleve’s Corner: Cleve Moler on Mathematics and Computing

Cleve’s Corner: Cleve Moler on Mathematics and Computing The MATLAB Blog

The MATLAB Blog Guy on Simulink

Guy on Simulink MATLAB Community

MATLAB Community Artificial Intelligence

Artificial Intelligence Developer Zone

Developer Zone Stuart’s MATLAB Videos

Stuart’s MATLAB Videos Behind the Headlines

Behind the Headlines File Exchange Pick of the Week

File Exchange Pick of the Week Hans on IoT

Hans on IoT Student Lounge

Student Lounge MATLAB ユーザーコミュニティー

MATLAB ユーザーコミュニティー Startups, Accelerators, & Entrepreneurs

Startups, Accelerators, & Entrepreneurs Autonomous Systems

Autonomous Systems Quantitative Finance

Quantitative Finance MATLAB Graphics and App Building

MATLAB Graphics and App Building

Comments

To leave a comment, please click here to sign in to your MathWorks Account or create a new one.