The LINPACK Benchmark

By reaching 33.86 petaflops on the LINPACK Benchmark, China's Tianhe-2 supercomputer has just become the world's fastest computer. The technical computing community thinks of LINPACK not as a matrix software library, but as a benchmark. In that role LINPACK has some attractive aspects, but also some undesirable features.

Contents

Tianhe-2

An announcement made last week at the International Supercomputing Conference in Leipzig, Germany, declared the Tianhe-2 to be the world's fastest computer. The Tianhe-2, also known as the MilkyWay-2, is being built in Gunagzho, China by China's National University of Defense Technology. Tianhe-2 has 16,000 nodes, each with two Intel Xeon IvyBridge processors and three Xeon Phi processors, for a combined total of 3,120,000 computing cores.

Top500

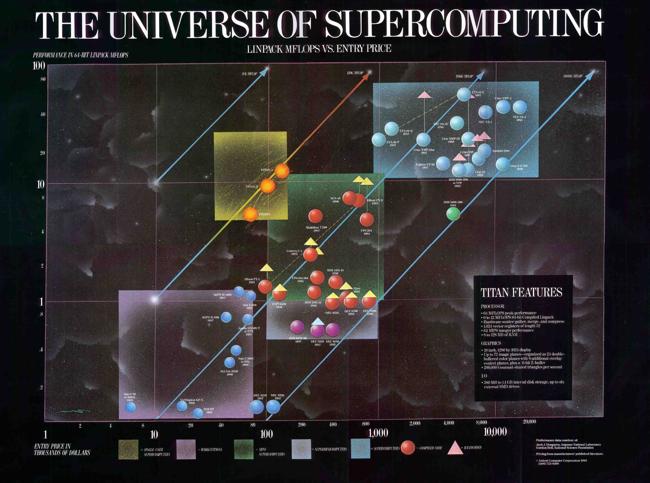

The ranking of world's fastest computer is based on the LINPACK Benchmark. Since 1993, LINPACK benchmark results have been collected by the Top500 project. They announce their results twice a year at international supercomputing conferences. Last week's conference in Leipzig produced this Top 500 List. The next Supercomputing Conference will be in November in Denver.

Tianhe-2's top speed of 33.86 petaflops on the latest Top500 list is nearly twice as fast as number two Titan from Oak Ridge at 17.59 petaflops and number three Sequoia from Lawrence Livermore at 17.17 petaflops.

Benchmark Origins

The LINPACK benchmark is an accidental offspring of the development of the LINPACK software package in the 1970's. During the development we asked two dozen universities and laboratories to test the software on a variety of main frame machines that were then available in central computer centers. We also asked them to measure the time required for two subroutines in the package, DGEFA and DGESL, to solve a 100-by-100 system of simultaneous linear equations. With the LINPACK naming conventions, DGEFA stands for Double precision GEneral matrix FActor and DGESL stands for Double precision GEneral matrix SoLve.

Appendix B of the LINPACK Users' Guide has the timing results. The hand-written notes shown here are Jack Dongarra's calculation of the megaflop rate, millions of floating point operations per second. With a matrix of order $n$, the megaflop rate for a factorization by Gaussian elimination plus two triangular solves is

$megaflops = (\frac{2}{3}n^3 + 2n^2)/(time)/10^6$

In 1977, we chose $n$ = 100 because this was large enough to stress the campus main frames of the day. Notice that almost half of the machines required a second or more to solve the equations. In fact, the DEC-10 at Yale did not have enough memory to handle a 100-by-100 system. We had to use $n$ = 75 and extrapolate the execution time.

The fastest machine in the world in 1977, according to this LINPACK benchmark, was the newly installed Cray-1 at NCAR, the National Center for Atmospheric Research in Boulder. Its performance was 14 megaflops.

Evolution

Jack's notes were the beginning of LINPACK as a benchmark. He continued to collect performance data. From time to time he would publish lists, initially of all the machines for which he had reported results, then later of just the fastest. Importantly, Jack has always required an a postiori test on the relative residual,

r = norm(A*x - b)/(norm(A)*norm(x))

More than once, a large residual has revealed some underlying hardware or system fault.

For a number of years, Jack insisted that the original Fortran DGEFA and DGESL be used with n = 100. Only the underlying BLAS routines could be customized. As processors became faster and memories larger, he relaxed these requirements.

The LINPACK software package has been replaced by LAPACK, which features block algorithms to take advantage of cache memories. Matrix computation on distributed memory parallel computers with message passing interprocessing communication is now handled by ScaLAPACK

The benchmark itself has become HPL, which is described as "A Portable Implementation of the High-Performance Linpack Benchmark for Distributed-Memory Computers". The web page at the Innovative Computing Laboratory at the University of Tennessee, which Dongarra directs, summarizes the algorithm employed by HPL with these keywords:

Two-dimensional block-cyclic data distribution - Right-looking variant of the LU factorization with row partial pivoting featuring multiple look-ahead depths - Recursive panel factorization with pivot search and column broadcast combined - Various virtual panel broadcast topologies - bandwidth reducing swap-broadcast algorithm - backward substitution with look-ahead of depth 1.

36 years

We went from 14 megaflops on the Cray-1 at NCAR in 1977 to almost 34 petaflops on the Tianhe-2 in China in 2013. That's a speed-up by a factor of $2.4 \cdot 10^9$ in 36 years. This is a doubling of speed about every 14 months. That is amazing!

Moore's Law that transistor counts double roughly every 24 months would account for a speed-up over 36 years of only $2^{(12/24)36}=2.6 \cdot 10^5$. Moore's Law that transistor counts double and clock speeds get faster roughly every eighteen months would account for a speed-up of something like $2^{(12/18)36}=1.7 \cdot 10^7$. Neither of these comes close to explaining what we've seen with LINPACK. In addition to faster hardware, the speed-up in LINPACK is due to all the algorithmic innovation represented by those terms in the description of HPL.

Green 500

Many years ago we introduced the Intel Hypercube at a small conference on parallel computing in Knoxville. The machine required 220 volts, but the hotel conference room we were using didn't have 220. So we rented a gas generator, put it in the parking lot, and ran a long cable past the swimming pool into the conference room. When I gave my talk about "Parallel LINPACK on the Hypercube", some wise guy asked "How many megaflops per gallon are you getting?" It was intended as a joke at the time, but it is really an important question today. I've made this cartoon.

Energy consumption of big machines is a very important issue. When I took a tour of the Oak Ridge Leadership Computing Facility a few years ago, Buddy Bland, who is Project Director, told me he could tell when he comes to the Lab in the morning if they are running the LINPACK benchmark by looking at how much steam is coming out of the cooling towers. LINPACK generates lots of heat.

The Green 500 is looking for energy efficient supercomputers. The project also uses LINPACK, but with a different metric, gigaflops per watt. On the most recent list, issued last November, the world's most energy efficient super computer is a machine called Beacon, also in Tennessee, at the University of Tennessee National Institute for Computational Sciences. A new Green 500 list is scheduled to be released June 28th.

Geek Talk

I've only heard the LINPACK Benchmark mentioned once in the mainstream public media, and that was not in an entirely positive light. I was listening to Monday Night Football on the radio. (That tells you how long ago it was.) It was a commercial for personal computers. A guy goes into a computer store. The salesman confuses him with incomprehensible geek talk about "Fortran Linpack megaflops". So he leaves and goes to Sears where he gets straight talk from the salesman and buys a Gateway.

A few years ago a TV commercial for IBM during the Super Bowl subtly boasted about "petaflops". That was also about LINPACK, but the commercial didn't say so.

Criticism

An excellent article by Nicole Hemsoth in the March 27 issue of HPCWire set me thinking again about criticism of the LINPACK benchmark and led to this blog post. The headline read:

FLOPS Fall Flat for Intelligence

The article quotes a Request For Information (RFI) from the Intelligence Advanced Research Projects Activity about possible future agency programs.

In this RFI we seek information about novel technologies that have the potential to enable new levels of computational performance with dramatically lower power, space and cooling requirements than the HPC systems of today. Importantly, we also seek to broaden the definition of high performance computing beyond today's commonplace floating point benchmarks, which reflect HPC's origins in the modeling and analysis of physical systems. While these benchmarks have been invaluable in providing the metrics that have driven HPC research and development, they have also constrained the technology and architecture options for HPC system designers. The HPC benchmarking community has already started to move beyond the traditional floating point benchmarks with new benchmarks focused on data intensive analysis of large graphs and on power efficiency.

I certainly agree with this point of view. Thirty five years ago floating point multiplication was a relatively expensive operation and the LINPACK benchmark was well correlated with execution times for more complicated technical computing workloads. But today the time for various kinds of memory access usually dominates the time for arithmetic operations. Even among matrix computations, the extremely large dense matrices involved in the LINPACK benchmark are not representative. Most large matrices are sparse and involve different data structures and storage access patterns.

Even accepting this criticism as valid, I see nothing in the near future that can challenge the LINPACK Benchmark for its role in determining the World's Fastest Computer.

Legacy

We have now collected a huge amount of data about the performance of a wide range of computers over the last 35 years. It is true that large dense systems of simultaneous linear equations are no longer representative of the range of problems encountered in high performance computing. Nevertheless, tracking the performance on this one problem over the years gives a valuable view into the history of computing generally.

The Top 500 Web site and the Top 500 presentations at the supercomputer conferences provide fascinating views of this data.

Home Runs

The LINPACK Benchmark in technical computing is a little like the Home Run Derby in baseball. Home runs don't always decide the result of a baseball game, or determine which team is the best over an entire season. But it sure is interesting to keep track of home run statistics over the years.

Exaflops

If you go to supercomputer meetings like I do, you hear a lot of talk about getting to "exaflops". That's $10^{18}$ floating point operations per second. A thousand petaflops. "Only" 30 times faster than Tianhe-2. Current government research programs and, I assume, industrial research programs are aimed at developing machines capable of exaflop computation in just a few years from now.

But exaflop computation on what? Just the LINPACK Benchmark? I certainly hope that the first machine to reach an exaflop on the Top500 is capable of some actually useful work as well.

A New Benchmark

In a recent interview in HPC Wire, done at the ISC in Germany, Jack Dongarra echoes some of the same opinions about the LINPACK Benchmark that I have expressed here. He points to a recent technical report that he has written with Mike Heroux titled "Toward a New Metric for Ranking High Performance Computing Systems". They describe work in progress involving a large scale application of the preconditioned conjugate gradient algorithm intended to complement LINPACK in benchmarking supercomputers. They're not trying to replace LINPACK, just trying to include something else. It will be interesting to see if they make any headway. I wish them luck.

- Category:

- History,

- Performance,

- Supercomputing

Comments

To leave a comment, please click here to sign in to your MathWorks Account or create a new one.