Comparing Runs in SDI

Today I upgraded a large model to the latest release of MATLAB. I found a trick to compare the results before and after the update that I thought I should share.

Different Results

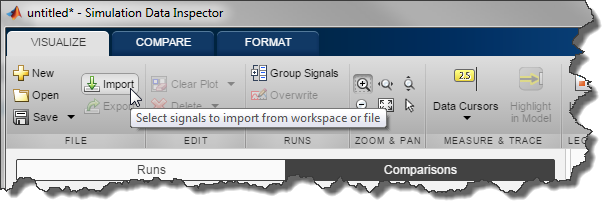

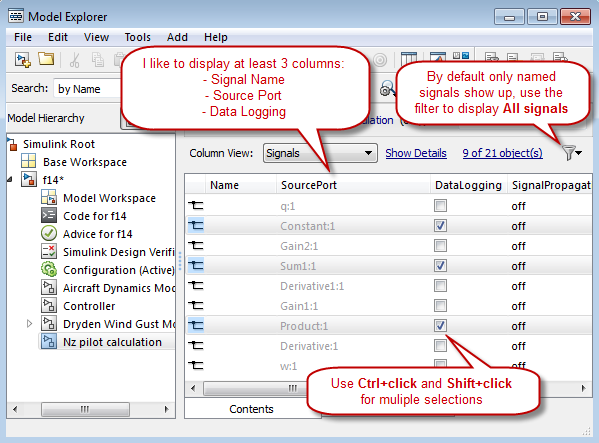

To validate that the model simulates as expected, I decided to log many signals and compare them. To do that, I saved the Simulink.SimulationOutput generated by the model in each release and imported both into the Simulation Data Inspector.
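One way to script that save-and-import step is sketched below. The model name `myModel`, the MAT-file names, and the run names are hypothetical placeholders, and the sketch assumes that `sim` returns a single Simulink.SimulationOutput object (single-output format) in both releases.

```matlab
% --- Run once in each release (file name differs per release) ---
out = sim('myModel');                 % 'myModel' is a hypothetical model name
save('results_old.mat', 'out');       % use e.g. 'results_new.mat' in the new release

% --- Then, in a single MATLAB session ---
old = load('results_old.mat');        % hypothetical file names
new = load('results_new.mat');

% Create one Simulation Data Inspector run per release from the saved outputs
oldRunID = Simulink.sdi.createRun('Previous release', 'vars', old.out);
newRunID = Simulink.sdi.createRun('Latest release',   'vars', new.out);

% Open the Simulation Data Inspector to look at the imported runs
Simulink.sdi.view;
```

The same import can be done interactively from the Simulation Data Inspector window; the script is only convenient when the process has to be repeated.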

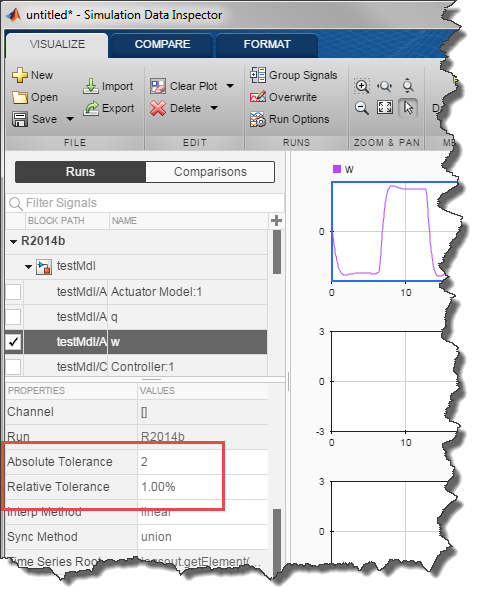

I quickly realized that the results did not match exactly. This is typical: with floating-point numbers, it is usually a bad idea to compare two results and expect a perfect match. Because of floating-point round-off errors, it is more appropriate to check whether the difference between the compared signals is within a certain tolerance.
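To make the idea concrete, here is a small sketch in plain MATLAB, independent of the Simulation Data Inspector. The data is made up for illustration, and the tolerance band is assumed to be the larger of an absolute tolerance and a relative tolerance scaled by the baseline value.

```matlab
% Made-up data: the same signal from two releases, differing by round-off-sized noise
t        = (0:0.1:1)';
baseline = sin(t);
updated  = baseline + 1e-12*cos(t);

relTol = 0.01;    % 1% relative tolerance
absTol = 1e-9;    % absolute floor, useful where the baseline is close to zero

% An exact comparison fails even though the signals are practically identical
exactMatch = isequal(baseline, updated);

% A tolerance-based comparison: pass if every sample stays inside the band
tol       = max(absTol, relTol*abs(baseline));
withinTol = all(abs(updated - baseline) <= tol);

fprintf('Exact match: %d, within tolerance: %d\n', exactMatch, withinTol);
```

The absolute tolerance matters because a purely relative band shrinks to zero wherever the baseline crosses zero.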

Adjusting the Tolerances

In the Runs view, it is possible to specify the tolerances used to decide if a signal passes or fails the comparison.

Now you might ask: If I want to compare a thousand signals to see if the difference for each of them is within 1%, do I need to set them one by one?

Specifying the Tolerances Programmatically

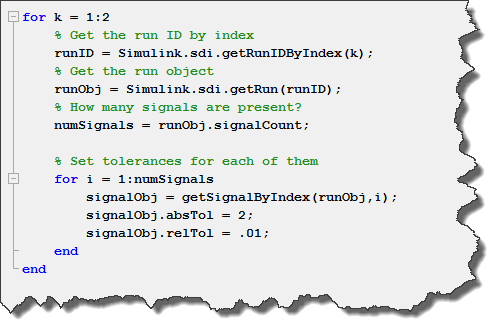

This is where the Simulation Data Inspector programmatic API becomes useful.

With a few lines of code, it is possible to access the runs, the signals in each run, and get or set their properties.
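A minimal sketch of such a loop is shown here, assuming the runs to compare are already loaded in the Simulation Data Inspector. It applies the 1% relative tolerance from the question above to every signal of every run. The `RelTol` property name is the one used by `Simulink.sdi.Signal` in recent releases; older releases may spell it differently, so check the documentation for your version.

```matlab
% Relative tolerance to apply to every signal (1%)
relTol = 0.01;

% IDs of all runs currently in the Simulation Data Inspector
runIDs = Simulink.sdi.getAllRunIDs;

for k = 1:numel(runIDs)
    sdiRun = Simulink.sdi.getRun(runIDs(k));      % Simulink.sdi.Run object

    for idx = 1:sdiRun.SignalCount
        sig = sdiRun.getSignalByIndex(idx);       % Simulink.sdi.Signal object
        sig.RelTol = relTol;                      % assumed property name, see note above
    end
end
```

To restrict the change to one run, replace `runIDs` with the ID of that run, typically the baseline of the comparison.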

What I find convenient is that the API works directly on the open Simulation Data Inspector window. This means that I can immediately go back to the Simulation Data Inspector, run the comparison, and see whether the signals fall within the specified tolerances.

Now it's your turn

Give this a try and let us know what you think by leaving a comment here.
