Put some Color in your LEDs: Controlling Your Raspberry Pi from your iPhone with Simulink

This week Mariano Lizarraga Fernandez is back to describe some cool stuff he came up with, using the iPhone and iPad Support from Simulink in combination with the Raspberry Pi Support from Simulink.

Introduction

Color-controlled lighting has become increasingly popular. Although it is sometimes purely ornamental, there is scientific evidence that the color of the light in your environment has a direct impact on your circadian rhythm.

There are many off-the-shelf "smart" lightbulbs and LED strips on the market that you can control with your mobile phone. However, wouldn't it be fun to build your own with Simulink?

In this post, we'll show you how you can do just that using Simulink, a Raspberry Pi with a Sense HAT add-on board, and your iPhone.

Producing Color Light using the Raspberry Pi's Sense HAT

The Sense HAT is a full-featured add-on board that was developed specifically for the Astro Pi mission. It has a 9-DOF IMU (3 accelerometers, 3 gyros, and 3 magnetometers), a humidity sensor, a temperature sensor, a barometric pressure sensor, and an 8 x 8 RGB LED matrix. In R2017a we introduced support for all of these in our popular Hardware Support Package.

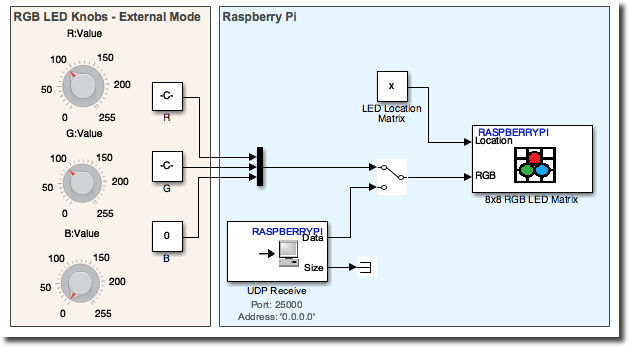

The Simulink block for the LED Matrix allows you to control the RGB value of each LED individually or all together. To test this, you can create a model that lets you change the RGB value of the LEDs either interactively, while running in External mode, or by reading in a UDP datagram.

In this way, you can press play and interact with the LEDs directly from Simulink, or deploy the model to the target and control the LEDs from another device on the same network.
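To make the "another device on the same network" idea concrete, here is a minimal Python sketch of a sender. It assumes the model's UDP receive side expects a payload of three unsigned 8-bit values in R, G, B order. That layout, like the function names `pack_rgb` and `send_rgb`, is an assumption for illustration; the real format depends on how the receiving block is configured in your model.

```python
import socket
import struct


def pack_rgb(r, g, b):
    """Clamp each channel to 0..255 and pack it as three unsigned bytes.

    The 3-byte R, G, B layout is an assumption, not something fixed by Simulink.
    """
    clamped = [max(0, min(255, int(v))) for v in (r, g, b)]
    return struct.pack("3B", *clamped)


def send_rgb(host, port, r, g, b):
    """Send one RGB command as a single UDP datagram and return the payload."""
    payload = pack_rgb(r, g, b)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))
    return payload
```

A call such as `send_rgb("192.168.1.50", 25000, 255, 128, 0)` would then request an orange color. The address and port here are hypothetical placeholders; use the IP address of your Raspberry Pi and the port set in your model.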

Controlling your Raspberry Pi from your iPhone

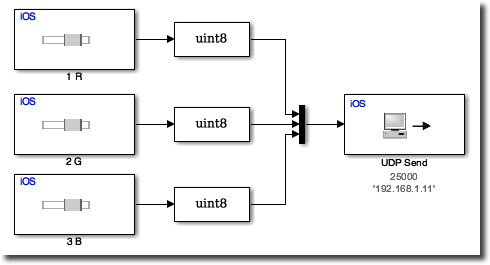

Now it is time to control those LEDs directly from your iPhone. Using the iPhone Support Package, we can lay out a model in which three sliders feed a UDP Send block that sends the datagram to the Raspberry Pi. It really is that simple!

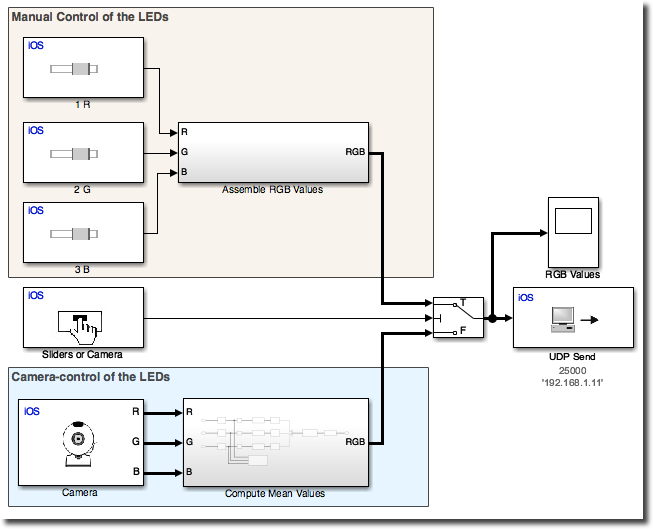

Now it is time to add some more functionality to the model. Let's try to change the color of those LEDs based on the average color of what the iPhone's back camera is looking at. To do that, we use the Camera block to read in the video stream. With that, we can compute the average value of each color channel and send it out as a command to the LEDs. To reduce the flickering of the LEDs, we can add a 5 Hz single-pole low-pass filter.
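The two processing steps described above, per-channel averaging followed by a single-pole low-pass filter, can be sketched in plain Python. This is an illustration of the idea, not the Simulink implementation. The frame representation (rows of `(r, g, b)` tuples), the 30 Hz frame rate, and the names `mean_rgb` and `SinglePoleLowPass` are assumptions. The filter uses a common discretization of a first-order RC low-pass, which is one of several reasonable choices.

```python
import math


def mean_rgb(frame):
    """Average each channel over a non-empty frame of rows of (r, g, b) tuples."""
    totals = [0, 0, 0]
    count = 0
    for row in frame:
        for pixel in row:
            for c in range(3):
                totals[c] += pixel[c]
            count += 1
    return tuple(t / count for t in totals)


class SinglePoleLowPass:
    """First-order low-pass filter: y[n] = y[n-1] + alpha * (x[n] - y[n-1])."""

    def __init__(self, cutoff_hz, sample_rate_hz):
        dt = 1.0 / sample_rate_hz
        rc = 1.0 / (2.0 * math.pi * cutoff_hz)
        self.alpha = dt / (rc + dt)
        self.state = None  # initialized from the first sample

    def update(self, sample):
        """Filter one (r, g, b) sample and return the smoothed value."""
        if self.state is None:
            self.state = tuple(sample)
        else:
            self.state = tuple(
                y + self.alpha * (x - y) for x, y in zip(sample, self.state)
            )
        return self.state


# Assumed 30 Hz frame rate with the 5 Hz cutoff mentioned in the text.
lpf = SinglePoleLowPass(cutoff_hz=5.0, sample_rate_hz=30.0)
smoothed = lpf.update(mean_rgb([[(0, 0, 0), (255, 255, 255)]]))
```

Each new camera frame would go through `mean_rgb` and then `lpf.update`, and the smoothed result would be sent to the LEDs. A lower cutoff gives smoother but slower color changes.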

Putting it all Together

Finally, we can use both the sliders and the image averaging in a single model, and use a Button to select which of the two sets the LEDs' color.

To see the actual values sent to the Raspberry Pi, we can use a new feature introduced in R2017a: Simulink Scope in Mobile Apps.

You can see it in action for yourself in the following video:

Now it's your turn

If you are using the Sense HAT in your Raspberry Pi projects, you can now take full advantage of the support introduced in R2017a. In the iOS Support Package, the newly introduced support for the Scope block lets you plot variables directly on your iPhone screen.

Give it a try and let us know what you think by leaving a comment below.
