Different Results in Accelerated Mode Versus Normal Mode

By Guy Rouleau

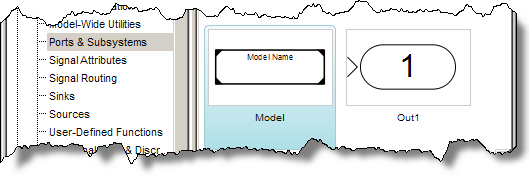

This week I received a large model giving different results when used as a referenced model in Accelerated mode, compared to the Normal Mode. To give you an idea, the top model in this application looked like this:

After a quick inspection of the model, I found nothing obvious. Accelerated mode uses code generation technology to run the simulation. Real-Time Workshop Embedded Coder offers the Code Generation Verification (CGV) API to verify the numerical equivalence of generated code and the simulation results. Using this API, I decided to test the code generated for this referenced model. Here is how it works.

Log Input Data

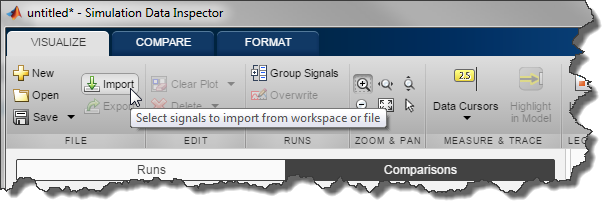

To begin, I enabled signal logging for the signals entering the referenced model in Normal mode. Then I ran the top model. The logged data allows me to test the Controller subsystem alone, without the plant model.

Set up the model to be analyzed

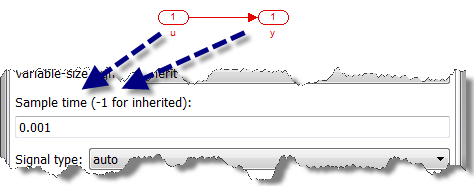

From now on, I only need the referenced model. In this model, I enabled data import from the model configuration, so that the previously logged data can be read by the Inport block.

Then I enabled signal logging for the signals I want to compare. For a first run, I logged only the controller output, to confirm that this setup reproduces the issue.

Write a test script for SIL testing

The CGV API is designed to perform Software-in-the-Loop (SIL) and Processor-in-the-Loop (PIL) testing for a model. To write a script validating the numerical equivalence of the generated code, I started with the documentation section "Example of Verifying Numerical Equivalence Between Two Modes of Execution of a Model". Based on this example, it took me only a few minutes to write the following:

cgvModel = 'Isolated';

% Configure the model
cgvCfg = cgv.Config(cgvModel, 'Connectivity', 'sil')
cgvCfg.configModel();

% Execute the simulation
cgvSim = cgv.CGV(cgvModel, 'Connectivity', 'sim')
result1 = cgvSim.run()

% Execute the generated code
cgvSil = cgv.CGV(cgvModel, 'Connectivity', 'sil')
result2 = cgvSil.run()

% Get the output data
simData = cgvSim.getOutputData(1);
silData = cgvSil.getOutputData(1);

% Compare results
[matchNames, ~, mismatchNames, ~] = ...
    cgv.CGV.compare(simData, silData, 'Plot', 'mismatch')

At the completion of this script, the following figure appeared, confirming that the results from the generated code are different from the simulation.

Identify the origin of the difference

To identify the origin of the difference, I enabled logging for more signals in the model. After a few iterations, and without modifying the above script, I identified the following subsystem, where the input was identical but the output was different:
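The narrowing-down step amounts to finding the first sample at which two logged signals stop agreeing bit for bit. The C sketch below illustrates that idea only. It is not how `cgv.CGV.compare` is implemented, and the function name `first_mismatch` and the array layout are assumptions made for this example.

```c
#include <stddef.h>
#include <string.h>

/*
 * Hypothetical helper: return the index of the first sample whose bit
 * pattern differs between two single-precision logs, or n if every
 * sample matches. Comparing bytes with memcmp (instead of ==) also
 * distinguishes +0 from -0 and treats identical NaN patterns as equal.
 */
static size_t first_mismatch(const float *sim, const float *sil, size_t n)
{
    for (size_t i = 0; i < n; ++i) {
        if (memcmp(&sim[i], &sil[i], sizeof(float)) != 0) {
            return i;
        }
    }
    return n;
}
```

Running such a check on each logged signal in turn, working from the output back toward the inputs, points to the first block whose inputs match while its output does not.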

When looking at the generated code, I found out that the line for this subsystem is:

(*rty_Out2) = 400.0F * (*rtu_In1) * 100.0F;

I made a quick test and manually changed the code to:

float tmp;
tmp = 400.0F * (*rtu_In1);
(*rty_Out2) = tmp * 100.0F;

Surprisingly, this modified code produces results identical to simulation!

Updated 2011-03-22: Note, this doesn't always work. More information below.

Consult an expert

I have to admit, I had no idea how these two nearly identical pieces of code could lead to different results, so I asked one of our experts in numerical computation.

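I learned that some compilers use a technique called extended precision. When a single line of code contains more than one operation, the compiler can keep the intermediate results in a container wider than the declared type, and round to that type only when the final value is stored. The goal is to provide more accurate results. In this case, however, it also leads to surprises: splitting the expression into two statements forces the intermediate product to be rounded to single precision, while the one-line version may skip that rounding.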
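To make the effect concrete, here is a minimal C sketch that emulates it in a portable way. It does not reproduce the user's generated code or any particular compiler's behavior: the wider container is imitated with an explicit `double`, and the function names `scale_stepwise` and `scale_wide` are hypothetical.

```c
/*
 * Computation "as written": round to single precision after each
 * multiplication. The volatile qualifier forces each result to be
 * stored as a float, so any extra precision is dropped at that point.
 */
static float scale_stepwise(float in)
{
    volatile float tmp = 400.0F * in;
    volatile float out = tmp * 100.0F;
    return out;
}

/*
 * Emulated wider intermediate: both multiplications are carried out
 * in double precision and the result is rounded to float only once.
 */
static float scale_wide(float in)
{
    double wide = 400.0 * (double)in * 100.0;
    return (float)wide;
}
```

For the input 1 + 2^-20 (written `1.0F + 1.0F / 1048576.0F`), `scale_stepwise` returns 40000.03515625 and `scale_wide` returns 40000.0390625, one single-precision step apart. Many other inputs, such as 1.0F, give identical results from both functions, which is why this kind of difference can stay hidden until a particular signal value shows up.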

After understanding this behavior, we recommended a few options to the user to avoid this type of expression folding in the generated code, and consequently avoid this behavior of the compiler.

Updated 2011-03-22: Note that the root cause of this difference is not the RTW Expression Folding option. Disabling it just happens to work around the behavior of the compiler. The right way to prevent the compiler from using extended precision is to provide the compiler flags that force it to do the computation as written.
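Which flags apply depends on the compiler and target, so check the compiler documentation (GCC, for example, documents `-ffloat-store` and `-fexcess-precision=standard`). One way to see what a given compiler and flag combination does is the standard C99 macro `FLT_EVAL_METHOD` from `<float.h>`. The sketch below is only an illustration; the helper names `eval_method` and `may_widen_float_intermediates` are hypothetical.

```c
#include <float.h>

/*
 * FLT_EVAL_METHOD (C99 and later) describes how floating-point
 * expressions are evaluated:
 *    0  in the range and precision of their own type
 *    1  float and double operations are evaluated as double
 *    2  all operations are evaluated as long double
 *   -1  indeterminable
 */
static int eval_method(void)
{
#ifdef FLT_EVAL_METHOD
    return (int)FLT_EVAL_METHOD;
#else
    return -1; /* macro not provided by this compiler: treat as unknown */
#endif
}

/* Nonzero when float intermediates may be kept wider than float. */
static int may_widen_float_intermediates(int method)
{
    return method != 0;
}
```

If `may_widen_float_intermediates(eval_method())` is nonzero for the build that produces the generated code, a one-line expression such as `400.0F * (*rtu_In1) * 100.0F` is a candidate for the kind of mismatch described above.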

Conclusion

Without the Code Generation Verification API, I would have spent a lot of time wiring debugging signals in the model. This tool helped me to quickly identify the root cause of the problem without modifying the model. Note that the CGV API can do a lot more than what I showed here. Look at the CGV documentation for more details.

Now it's your turn

How do you verify that the generated code is numerically equivalent to your model? Leave a comment here.
